There is a silent failure mode in SEO.

It doesn’t trigger a manual action. It doesn’t cause an overnight traffic collapse. Instead, visibility weakens gradually. You publish strong content, but impressions stall. Crawling slows. New pages struggle to surface.

This is Technical Trust breaking.

In 2026, search systems are resource-constrained. Before evaluating content quality, they first decide whether a site is reliable to crawl, render, and interpret. When the technical foundation introduces friction, exposure is reduced quietly and consistently.

This article explains where Technical Trust breaks, how crawl confidence is lost, and how to restore it.

Technical Trust Explained at a Glance

What is happening?

Search visibility weakens even when content quality improves.

Why is it happening?

Search systems now restrict crawling and extraction when sites are inefficient, unreliable, or costly to process.

What breaks first?

Technical efficiency and crawl confidence break before rankings visibly decline.

Can this be reversed?

Yes, but only by removing friction that reduces crawl interest and indexing confidence.

This article builds on the earlier parts of the series. “How Semantic SEO Works in 2026 and Why Keywords Alone No Longer Rank” explains how search systems evaluate meaning and intent before technical signals matter. “Why Ranking #1 No Longer Delivers Visibility and How Search Works Now” shows why visibility can decline even when rankings appear stable. “When SEO Stops Working and What Breaks” outlines the systemic failures that usually come first. When those layers weaken, Technical Trust becomes the bottleneck, and crawling, indexing, and extraction slow down without obvious warnings.

Technical Efficiency Breaks When Crawl Interest Drops

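Crawling is not governed by a fixed crawl budget. It is governed by crawl interest, which Google’s documentation calls crawl demand: how much Googlebot wants to crawl a site, as opposed to how much load the server can handle.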

Crawl interest reflects how valuable and efficient previous crawls have been. When crawlers encounter slow rendering, broken links, redirect chains, or low-value pages, they reduce crawl depth and frequency.

What breaks

Crawl efficiency and prioritization.

Symptom

New or updated pages remain uncrawled for weeks. “Last crawled” dates in Search Console’s URL Inspection tool lag far behind publication.
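One way to confirm this symptom is to compare publication dates with the first crawler request recorded in your server logs. The sketch below is a minimal Python illustration under loud assumptions: the log lines use a simplified, hypothetical tab-separated format (date, path, user agent), and any user agent containing "Googlebot" is treated as Google. Real access logs need a proper parser, and because the user-agent string is easy to spoof, genuine Googlebot traffic should be verified with a reverse DNS lookup.

```python
from datetime import date

def first_crawl_dates(log_lines, bot_token="Googlebot"):
    """Return {path: earliest date a matching crawler requested it}.

    Assumes a hypothetical tab-separated line format:
    YYYY-MM-DD<TAB>path<TAB>user-agent
    User-agent matching alone is spoofable; verify real crawlers separately.
    """
    first_seen = {}
    for line in log_lines:
        day, path, agent = line.rstrip("\n").split("\t", 2)
        if bot_token not in agent:
            continue  # skip human visitors and other bots
        seen = date.fromisoformat(day)
        if path not in first_seen or seen < first_seen[path]:
            first_seen[path] = seen
    return first_seen

def crawl_lag_days(published, log_lines):
    """Days from publication to first crawl; None means not crawled yet."""
    crawled = first_crawl_dates(log_lines)
    return {
        path: (crawled[path] - pub_date).days if path in crawled else None
        for path, pub_date in published.items()
    }

# Illustrative data only.
logs = [
    "2026-01-05\t/guides/crawl-budget\tMozilla/5.0 (compatible; Googlebot/2.1)",
    "2026-01-02\t/guides/crawl-budget\tMozilla/5.0 (Windows NT 10.0)",
    "2026-01-20\t/guides/index-bloat\tMozilla/5.0 (compatible; Googlebot/2.1)",
]
published = {
    "/guides/crawl-budget": date(2026, 1, 1),
    "/guides/index-bloat": date(2026, 1, 3),
    "/guides/schema": date(2026, 1, 4),
}
print(crawl_lag_days(published, logs))
# {'/guides/crawl-budget': 4, '/guides/index-bloat': 17, '/guides/schema': None}
```

A long lag, or a `None` for pages published weeks ago, is the pattern this section describes.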

Fix

Increase crawl yield by removing waste:

- Retire dead URLs permanently: 301-redirect them in a single hop to the closest relevant page, or return 410 when no replacement exists

- Reduce click depth so priority pages are reachable within three clicks of the homepage

- Remove low-value pages from internal navigation
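Click depth is the length of the shortest link path from the homepage, which is exactly what a breadth-first search over the internal link graph computes. A minimal Python sketch, assuming you already have a crawl export shaped as a dict from each URL to the URLs it links to; the `site` data and function names are hypothetical, and the three-click limit mirrors the guideline above.

```python
from collections import deque

def click_depths(links, start="/"):
    """Breadth-first search: fewest clicks from start to every reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def too_deep(links, priority_pages, max_depth=3, start="/"):
    """Priority pages deeper than max_depth, including unreachable (orphan) pages."""
    depths = click_depths(links, start)
    return sorted(p for p in priority_pages if depths.get(p, max_depth + 1) > max_depth)

# Hypothetical internal link graph from a site crawl.
site = {
    "/": ["/blog", "/services"],
    "/blog": ["/blog/page-2"],
    "/blog/page-2": ["/blog/page-3"],
    "/blog/page-3": ["/blog/technical-trust"],
    "/services": ["/services/seo-audit"],
}
print(click_depths(site)["/blog/technical-trust"])  # 4
print(too_deep(site, ["/services/seo-audit", "/blog/technical-trust", "/orphan"]))
# ['/blog/technical-trust', '/orphan']
```

Pages buried behind pagination, like `/blog/technical-trust` here, are the usual culprits; linking them from a hub page or the navigation brings them back within range.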

Google describes how its crawlers discover and prioritize pages, including crawl budget management for large sites, in its Google Search Central documentation:

https://developers.google.com/search/docs/crawling-indexing
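Redirect chains are one of the crawl-waste sources named above, and the single-hop rule in the fix list is easy to audit. A minimal Python sketch, assuming a hypothetical export of redirect rules as a source-to-target dict (not the format of any particular server or CMS): it resolves each chain, flags loops, and produces single-hop replacement rules.

```python
def resolve_redirects(redirects, max_hops=10):
    """Follow each redirect rule to its final destination.

    Returns {source: (final_url, hops)}. final_url is None when the chain
    loops back on itself or exceeds max_hops.
    """
    resolved = {}
    for source in redirects:
        seen = {source}
        current, hops = source, 0
        while current in redirects:
            current = redirects[current]
            hops += 1
            if current in seen or hops > max_hops:
                current = None  # loop or runaway chain: needs manual review
                break
            seen.add(current)
        resolved[source] = (current, hops)
    return resolved

def flattened_rules(redirects):
    """Replace every chain with a single hop: source -> final destination."""
    return {
        source: final
        for source, (final, hops) in resolve_redirects(redirects).items()
        if final is not None
    }

# Hypothetical export of redirect rules (source path -> target path).
redirects = {
    "/old-guide": "/guide-v2",
    "/guide-v2": "/guides/technical-trust",
    "/a": "/b",
    "/b": "/a",
}
for source, (final, hops) in resolve_redirects(redirects).items():
    if final is None:
        print(f"LOOP   {source}")
    elif hops > 1:
        print(f"CHAIN  {source} ({hops} hops) -> {final}")
print(flattened_rules(redirects))
```

Loops cannot be flattened automatically; they need a decision about which URL should survive, or a 410.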

Index Quality Breaks When Low-Value Pages Compete

Index control determines which pages are allowed to compete for visibility.

When thin, duplicated, or utility pages are indexed alongside high-value content, authority is diluted across the site.

Common sources of index pollution include:

- Internal search result pages

- Thin tag or archive pages

- Print-friendly or alternate versions

- Parameterized URLs and session IDs

What breaks

Index clarity and authority concentration.

Symptom

Indexed page counts far exceed the number of pages that actually deliver value.

Fix

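Use noindex deliberately. Allow crawling where necessary, but restrict indexing to pages that deserve visibility. Do not also block these URLs in robots.txt: a crawler that cannot fetch a page never sees its noindex directive.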
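Deciding which URLs get noindex works best as an explicit rule set rather than page-by-page judgment. A minimal Python sketch, assuming hypothetical path prefixes and parameter names that stand in for the index-pollution sources listed above; the returned value could be sent as an `X-Robots-Tag` response header or rendered as `<meta name="robots" content="noindex">`.

```python
from urllib.parse import urlsplit, parse_qs

# Hypothetical rules: adapt the paths and parameter names to your own URL scheme.
NOINDEX_PATH_PREFIXES = ("/search/", "/print/", "/tag/")
NOINDEX_PARAMS = {"sessionid", "sid", "sort", "q"}

def robots_directive(url):
    """Return an X-Robots-Tag value for utility URLs, or None to leave the page indexable."""
    parts = urlsplit(url)
    # Normalize to a trailing slash so "/search" matches "/search/" but "/searchlight" does not.
    path = parts.path.rstrip("/") + "/"
    if path.startswith(NOINDEX_PATH_PREFIXES):
        return "noindex"
    if NOINDEX_PARAMS & set(parse_qs(parts.query)):  # any flagged parameter name present
        return "noindex"
    return None

for url in [
    "https://example.com/guides/technical-trust",
    "https://example.com/search?q=crawl+budget",
    "https://example.com/guides/technical-trust?sessionid=abc123",
    "https://example.com/tag/misc",
]:
    print(url, "->", robots_directive(url))
```

Keeping the rules in one place makes it easy to review what is excluded, and to confirm none of these paths are also disallowed in robots.txt.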

Internal Linking Logic Breaks Topical Flow

Internal linking controls how relevance and authority move through a site.

When links are placed randomly or purely for keyword variation, topical signals fragment. Authority pools on a few pages while related content remains invisible.

What breaks

Topical signal distribution and crawl prioritization.

Symptom

One page ranks broadly while closely related pages never gain traction.

Fix

Use a hub-and-spoke structure:

- Pillar pages link to all supporting subtopics

- Subtopics link back to the pillar

- Related subtopics cross-link only when contextually aligned

This creates a predictable topical map that search systems can trust.
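The first two hub-and-spoke rules are mechanical enough to check automatically; the third, contextual alignment, still needs human judgment. A minimal Python sketch, assuming `links` is a hypothetical map from each URL to the set of URLs it links to (for example, built from a site crawl):

```python
def audit_hub(links, pillar, subtopics):
    """Check two mechanical hub-and-spoke rules for one topic cluster."""
    pillar_targets = links.get(pillar, set())
    return {
        # Rule 1: the pillar should link to every supporting subtopic.
        "pillar_missing": sorted(s for s in subtopics if s not in pillar_targets),
        # Rule 2: every subtopic should link back to the pillar.
        "no_link_back": sorted(s for s in subtopics if pillar not in links.get(s, set())),
    }

# Hypothetical cluster from a site crawl.
links = {
    "/technical-seo": {"/technical-seo/crawling", "/technical-seo/indexing"},
    "/technical-seo/crawling": {"/technical-seo", "/technical-seo/indexing"},
    "/technical-seo/indexing": {"/technical-seo/crawling"},
    "/technical-seo/rendering": {"/technical-seo"},
}
subtopics = ["/technical-seo/crawling", "/technical-seo/indexing", "/technical-seo/rendering"]
print(audit_hub(links, "/technical-seo", subtopics))
# {'pillar_missing': ['/technical-seo/rendering'], 'no_link_back': ['/technical-seo/indexing']}
```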

Rendering Reliability Breaks When Content Is Hidden

Search systems must reliably access primary content to evaluate it.

JavaScript-heavy frameworks often delay or obscure content behind client-side execution, deferred loading, or user interaction.

What breaks

Content accessibility and extraction reliability.

Symptom

Rendered HTML in URL Inspection is missing core text or primary elements.

Fix

Ensure critical content appears in the initial HTML using server-side rendering or static generation, and use hydration only to add interactivity on top of that HTML. If essential content does not appear quickly and predictably, it may not be evaluated.
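A quick way to test this is to fetch the raw HTML response without running JavaScript and check that the core text is already there. A minimal Python sketch, assuming you already have the response body as a string; the phrases and page snippets are hypothetical. Google does render JavaScript, so this is a stricter check than Google applies: it confirms the content does not depend on client-side execution at all.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collects text that sits outside <script>, <style>, and <template> elements."""
    SKIP = {"script", "style", "template"}

    def __init__(self):
        super().__init__()
        self.depth = 0  # > 0 while inside a skipped element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        if not self.depth:
            self.chunks.append(data)

def missing_in_initial_html(raw_html, required_phrases):
    """Return the required phrases that do not appear in the unrendered HTML text."""
    parser = TextExtractor()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split()).lower()  # collapse whitespace
    return [p for p in required_phrases if p.lower() not in text]

csr_shell = '<html><body><div id="root"></div><script>renderArticle()</script></body></html>'
ssr_page = '<html><body><main><h1>Technical Trust</h1><p>Crawl interest drops when...</p></main></body></html>'
phrases = ["Technical Trust", "crawl interest"]
print(missing_in_initial_html(csr_shell, phrases))  # ['Technical Trust', 'crawl interest']
print(missing_in_initial_html(ssr_page, phrases))   # []
```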

Mobile Performance Breaks Trust First

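Mobile is now the primary evaluation environment.

Search systems assess crawling, rendering, and usability almost entirely through mobile contexts. Under Google’s mobile-first indexing, the mobile version of a page is the one crawled with the smartphone crawler and used for indexing and ranking.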

What breaks

Usability trust and crawl confidence.

Common failure signals

- Layout shifts during load

- Overlapping or obstructive elements

- Small or crowded tap targets

- Performance optimized only for desktop

Symptom

Core Web Vitals fail on mobile despite acceptable desktop performance.

Fix

Test on mid-range mobile devices under real network conditions. Optimize for the slowest realistic user, not the fastest device.
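Google assesses Core Web Vitals from field data at the 75th percentile of page loads, segmented by device type, using published thresholds: LCP is good at 2.5 s or less and poor above 4 s; INP is good at 200 ms or less and poor above 500 ms; CLS is good at 0.1 or less and poor above 0.25. A minimal Python sketch of that classification, assuming you collect per-visit samples yourself (for example with a real-user monitoring script); the sample values are illustrative, and the nearest-rank percentile is a simplification of how Google aggregates field data.

```python
import math

# Published "good" / "poor" boundaries: LCP and INP in milliseconds, CLS unitless.
THRESHOLDS = {"LCP": (2500, 4000), "INP": (200, 500), "CLS": (0.1, 0.25)}

def p75(samples):
    """75th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank
    return ordered[rank - 1]

def assess(field_data):
    """Map each metric to (p75 value, rating) from per-visit samples."""
    result = {}
    for metric, samples in field_data.items():
        good, poor = THRESHOLDS[metric]
        value = p75(samples)
        if value <= good:
            rating = "good"
        elif value <= poor:
            rating = "needs improvement"
        else:
            rating = "poor"
        result[metric] = (value, rating)
    return result

# Illustrative samples for the same page on two device types.
mobile = {"LCP": [1800, 2600, 4300, 4800], "INP": [90, 150, 260, 600], "CLS": [0.02, 0.05, 0.12, 0.3]}
desktop = {"LCP": [900, 1200, 1400, 2100], "INP": [40, 60, 80, 120], "CLS": [0.0, 0.01, 0.02, 0.05]}
print("mobile ", assess(mobile))   # LCP poor, INP and CLS need improvement
print("desktop", assess(desktop))  # all good
```

Evaluating the percentile rather than the average is what makes the slowest realistic users count.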

Machine Readability Breaks Without Structured Data

Structured data removes ambiguity by explicitly describing content types and relationships.

Without it, search systems must infer meaning. In competitive environments, inference loses to clarity.

What breaks

Machine interpretation and eligibility for enhanced visibility.

Symptom

Competitors receive rich results while your content does not.

Fix

Implement JSON-LD structured data for:

- Articles and authorship

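- FAQs where appropriate (Google now shows FAQ rich results mainly for well-known, authoritative government and health sites)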

- Breadcrumbs for hierarchy clarity

Schema does not guarantee visibility, but its absence guarantees ambiguity.
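A minimal Python sketch that builds Article and BreadcrumbList JSON-LD from schema.org types and wraps it in a `<script type="application/ld+json">` tag. The headline, author, date, and URLs are placeholders, and the function names are hypothetical; rich result eligibility depends on Google’s own required properties, so validate the output with Google’s Rich Results Test.

```python
import json

def article_jsonld(headline, author, published, url):
    """schema.org Article with a Person author."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,  # ISO 8601 date string
        "mainEntityOfPage": url,
    }

def breadcrumb_jsonld(trail):
    """schema.org BreadcrumbList; trail is (name, url) pairs from the homepage down."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }

def script_tag(data):
    # Escape "</" so text inside the JSON cannot close the script element early.
    body = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'

print(script_tag(article_jsonld(
    "Where Technical Trust Breaks", "Jane Doe", "2026-01-15",
    "https://example.com/technical-trust",
)))
print(script_tag(breadcrumb_jsonld([
    ("Home", "https://example.com/"),
    ("Technical SEO", "https://example.com/technical-seo"),
    ("Technical Trust", "https://example.com/technical-trust"),
])))
```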

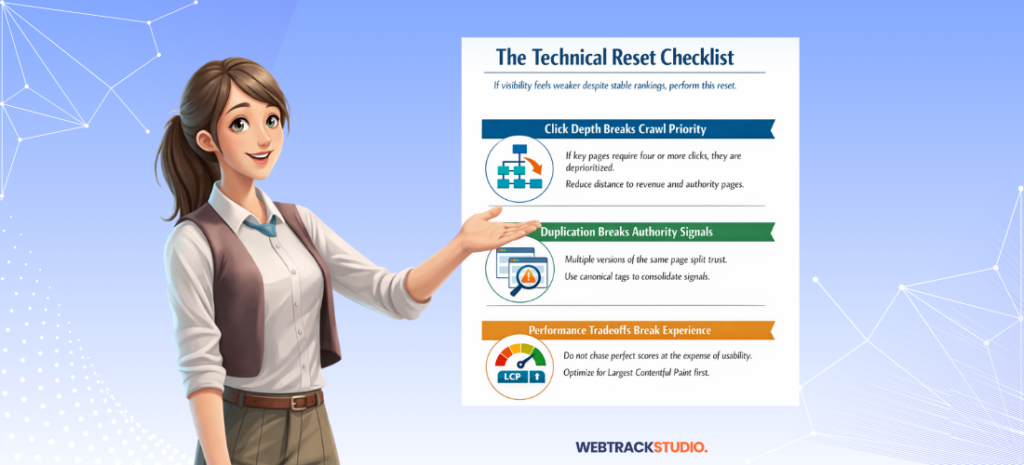

The Technical Reset Checklist

If visibility feels weaker despite stable rankings, perform this reset.

Click Depth Breaks Crawl Priority

If key pages require four or more clicks from the homepage, they are likely to be crawled less often and deprioritized.

Duplication Breaks Authority Signals

Multiple versions of the same page split trust. Use canonical tags to consolidate signals on one preferred URL, and point internal links and sitemaps at that same URL.
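Which parameters create duplicates differs by site, so the normalization policy below is an assumption to adapt, not a standard: it forces HTTPS, lowercases the host, drops the fragment, removes `utm_*` and a hypothetical list of session or referral parameters, and sorts what remains. Note that Google treats `rel="canonical"` as a strong signal rather than a guaranteed directive. A minimal Python sketch:

```python
import html
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical policy: parameters that never change page content on this site.
IGNORED_PARAMS = {"sessionid", "sid", "ref"}

def canonical_url(url):
    """Normalize a URL to one preferred form (assumes the site is served over HTTPS)."""
    parts = urlsplit(url)
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS and not key.lower().startswith("utm_")
    )
    return urlunsplit(("https", parts.netloc.lower(), parts.path or "/", urlencode(query), ""))

def canonical_link(url):
    """Render the <link rel="canonical"> tag for any variant of a page."""
    return f'<link rel="canonical" href="{html.escape(canonical_url(url))}">'

def duplicate_groups(urls):
    """Group crawled URLs by canonical form; keep only groups with duplicates."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonical_url(url)].append(url)
    return {canonical: variants for canonical, variants in groups.items() if len(variants) > 1}

urls = [
    "https://Example.com/guides/technical-trust?utm_source=newsletter",
    "http://example.com/guides/technical-trust?sessionid=abc123",
    "https://example.com/guides/technical-trust#faq",
    "https://example.com/guides/index-bloat",
]
print(duplicate_groups(urls))
print(canonical_link(urls[1]))
# <link rel="canonical" href="https://example.com/guides/technical-trust">
```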

Performance Tradeoffs Break Experience

Optimize for Largest Contentful Paint first. Do not chase scores at the expense of usability.

Frequently Asked Questions About Technical Trust

What happens when Technical Trust is low?

Crawling slows, indexing becomes selective, and visibility is limited even if rankings appear stable.

How long does it take to restore crawl confidence?

Improvements often appear within weeks after crawl friction is removed, but full recovery depends on site size and history.

Can strong content overcome technical issues?

No. Content is evaluated only after technical reliability is established.

Is Technical Trust a ranking factor?

It is not a single metric. It is a prerequisite system that determines whether ranking evaluation occurs.

The Bottom Line

Technical SEO is not about exploiting systems. It is about removing doubt.

When crawlers arrive, they should not struggle to load content, interpret structure, or navigate unnecessary complexity.

Sites that are technically predictable earn crawl confidence. Crawl confidence leads to faster indexing, broader extraction, and stronger visibility across modern search surfaces.

Stop fighting the crawler. Start enabling it.

What Comes Next

Part 5 explains how search systems evaluate topical authority across an entire site, not just individual pages.

If rankings look stable but results feel weaker, visibility is likely breaking upstream.

Request a Technical Trust Review

A diagnostic review identifies crawl friction, index dilution, and extraction gaps that suppress visibility before users ever see your content.